Recently VMware announced that Cloud Foundation would support external storage connectivity. When I first heard this I thought to myself, are VMware out of their minds? I meet and talk to customers more or less on a daily basis, and I also spoke to a lot of customers at VMworld about this, but the stance or direction from VMware has not changed: if you want a truly Software Defined Data Center then vSAN is the storage of choice.

So why have VMware decided to allow external storage in Cloud Foundation?

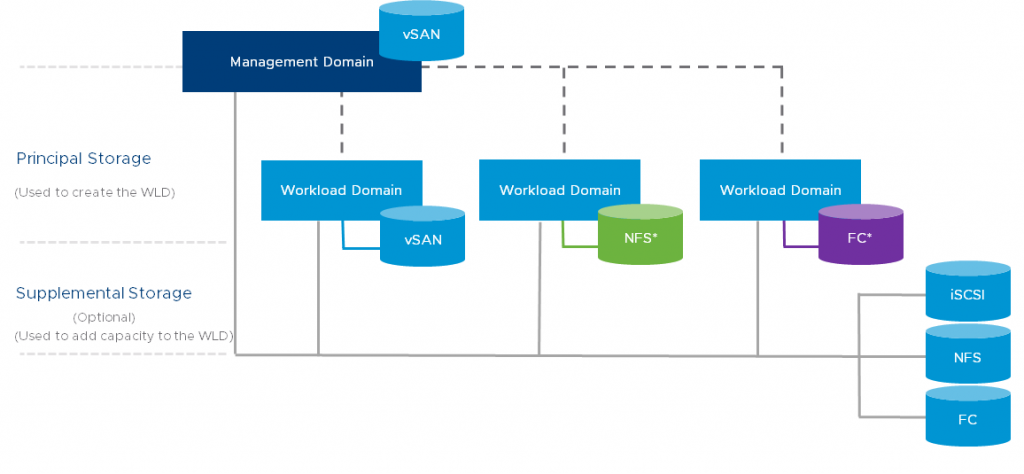

Many customers who are either about to start or have already started their journey to a Software Defined Data Center and/or Hybrid Cloud are still using existing assets that they wish to continue to sweat out. It may well be that their traditional storage is only a couple of years old, and VMware are not expecting customers to simply throw out existing infrastructure that was either recently purchased or is still being sweated out, as that would be a tall ask, right?

Customers also still have workloads that can’t simply be migrated to the full SDDC. The migration takes some time to plan: maybe the workload needs to be re-architected, or maybe there’s a specific need where a traditional storage array has to be used until any obstacles have been overcome. VMware recognises this, hence the support for external storage in Cloud Foundation.

There are also specific use cases where Hyper Converged powered by vSAN isn’t an ideal fit. Cold storage or archive storage is one of these, so supporting an array that can provide a supplemental storage architecture to meet these requirements is also a plus point.

Will I lose any functionality by leveraging traditional storage with Cloud Foundation? The simple answer is yes, for the following reasons:

- No lifecycle management for external storage through SDDC Manager, which means patching and updating of the storage is still a manual task.

- No single pane of glass management out of the box for external storage without installing third party plugins, which in my experience have a tendency to break other things.

- No automation of stretched cluster deployment on external storage; all the replication etc. has to be configured manually.

- Day-2 operations such as Capacity Reporting, Health Check and Performance Monitoring are lost unless you install third party software for the external storage.

- No auto deployment of the storage during a Workload Domain deployment; all the external storage has to be prepared beforehand.

- Losing the true “Software Defined Storage” aspect and granular object control; support for VVOLS on external storage is not there right now either.

Also remember that with Cloud Foundation, the Management Workload Domain has to run on vSAN; you cannot use external storage for this.
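To make that rule concrete, here is a minimal sketch in Python of how you might sanity check a planned set of Workload Domains before you start deploying. Everything in it is hypothetical and for illustration only: the `WorkloadDomain` class, the `validate_domains` function and the storage labels are names I made up, not part of SDDC Manager or any VMware API. It simply encodes the one constraint above: the Management Workload Domain must be on vSAN, while other Workload Domains may use vSAN or external storage.

```python
from dataclasses import dataclass

# Hypothetical labels and types for illustration only; these are NOT
# part of SDDC Manager or any VMware API.
VSAN = "vsan"
EXTERNAL = "external"


@dataclass
class WorkloadDomain:
    name: str
    is_management: bool
    storage: str  # expected to be VSAN or EXTERNAL


def validate_domains(domains):
    """Return a list of human-readable problems with the storage plan."""
    problems = []
    for d in domains:
        if d.storage not in (VSAN, EXTERNAL):
            problems.append(f"{d.name}: unknown storage type {d.storage!r}")
        elif d.is_management and d.storage != VSAN:
            # The one hard rule from the text: management runs on vSAN.
            problems.append(
                f"{d.name}: the Management Workload Domain has to run on vSAN"
            )
    return problems


plan = [
    WorkloadDomain("mgmt", is_management=True, storage=VSAN),
    WorkloadDomain("legacy-apps", is_management=False, storage=EXTERNAL),
    WorkloadDomain("new-apps", is_management=False, storage=VSAN),
]

# An empty list means the plan respects the rule.
print(validate_domains(plan))
```

A plan that puts the management domain on external storage would come back with one problem listed, which is the kind of thing you want to catch on paper rather than halfway through a deployment.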

So if you want a truly Software Defined Data Center with all the automation of deployment and all the nice built-in features for day-2 operations, then vSAN is the first choice of storage. If you have existing storage you wish to sweat out whilst you migrate to a full SDDC, then that’s supported too. And yes, you can have a vSAN cluster co-exist with external storage, which makes migrations so much easier.

The other aspect to look at is a Hybrid Cloud or Multi Cloud strategy. Cloud Foundation is all about providing a consistent infrastructure between your private and public clouds, so if your public cloud is using Cloud Foundation with vSAN, then the logical choice is to have vSAN as the storage for your Cloud Foundation Private Cloud, giving your workloads that consistent infrastructure no matter where they run.